Parsing Protobuf Definitions with Tree-sitter

If you work with Protocol Buffers (protobuf), you can really save time, boredom, and headache by parsing your definitions to build tools and generate code.

The usual tool for doing that is protoc. It supports plugins to generate output of various kinds: language bindings, documentation, etc. But if you want to do anything custom, you are faced with either using something limited like protoc-gen-gotemplate or writing your own plugin. protoc-gen-gotemplate works well, but you can’t build complex logic into the workflow; you are limited to what is possible in a simple Go template.

It’s also possible to use protoreflect from Go to process the compiled results at runtime. This is painful. Really painful.

So, at work, we had made little use of the protobuf definitions beyond their main purpose, apart from documentation and package configuration via custom options (which protobuf supports). Writing the protoreflect code to make that work is not something I want to repeat.

Then, recently, I revamped my editor setup and moved from Vim to Neovim. In the process I realized how awesome the Tree-sitter parsing library is, and that it would probably support extracting everything I wanted from our protobuf definitions. Neovim uses Tree-sitter extensively.

Why This Matters

Our evented and event-sourced backend at Mozi relies on protobuf for schema definitions and serialization of events. We use these same schemas everywhere from the frontend all the way to the backend. This means our whole system is working on the exact same entity definitions throughout. Good Stuff™.

In Go, the bindings are not really native structs and require a lot of GetXYZ() and GetValue() calls chained with nil checking to work around the fact that nil and zero values are encoded the same way in Protobuf. You also can’t use them with anything that relies on struct tags, because you can’t add your own tags to the generated code. I am told by the mobile devs that the Swift bindings are similarly unfriendly.

We use a mapping layer to paper over this and to make these easier to work with in our Go code, in data stores, and with off-the-shelf libraries.

We were maintaining custom mappings by hand. That’s a waste of time, and even getting GPT to write the transformations back and forth is annoying and invariably requires tweaking. So I wanted a solution that was much more automatic and repeatable.
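To make the mapping layer concrete, here is a minimal sketch of one hand-written mapping. StringValue, PBBlogPost, and their getters are hypothetical stand-ins that mimic the nil-safe getters protoc-gen-go generates (so the block runs without any generated code); BlogPost is the plain, taggable struct the mapping produces. It is an illustration of the boilerplate involved, not our actual mapping code.

```go
package main

// StringValue is a hypothetical stand-in for the generated
// google.protobuf.StringValue wrapper. Like generated Go code, its getter is
// nil-safe, which is what makes chained GetXYZ().GetValue() calls possible.
type StringValue struct{ Value string }

func (x *StringValue) GetValue() string {
	if x != nil {
		return x.Value
	}
	return ""
}

// PBBlogPost is a hypothetical stand-in for the generated BlogPost message.
type PBBlogPost struct {
	PostId *StringValue
	Title  *StringValue
}

func (x *PBBlogPost) GetPostId() *StringValue {
	if x != nil {
		return x.PostId
	}
	return nil
}

func (x *PBBlogPost) GetTitle() *StringValue {
	if x != nil {
		return x.Title
	}
	return nil
}

// BlogPost is the plain struct the mapping layer produces. We own this type,
// so we can add whatever struct tags other libraries need.
type BlogPost struct {
	PostID *string `json:"post_id,omitempty"`
	Title  *string `json:"title,omitempty"`
}

// optString maps an unset wrapper to nil, keeping "unset" distinct from "".
func optString(v *StringValue) *string {
	if v == nil {
		return nil
	}
	s := v.GetValue()
	return &s
}

// BlogPostFromProto is a hand-written mapping of the kind described above.
func BlogPostFromProto(pb *PBBlogPost) BlogPost {
	return BlogPost{
		PostID: optString(pb.GetPostId()),
		Title:  optString(pb.GetTitle()),
	}
}
```

Multiply this by every field of every message, in both directions, and it becomes clear why generating it is attractive.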

Here’s what I did.

Example Definition

First we’ll have a look at one protobuf definition. Then we’ll talk about extracting the information we want from it.

Imagine that we’re working with the following fairly typical protobuf message definition. We want to be able to extract the name of the message, the enum names and values, and the fields and their types. Here we are not particularly interested in the field numbers, but you could also extract them, of course.

```proto
syntax = "proto3";

package entities;

import "google/protobuf/wrappers.proto";

// A BlogPost represents a single post on the blog.
message BlogPost {
  // Some custom configuration options
  // ...

  // Each blog post is assigned a type so we can identify how it will be used
  enum PostType {
    POST_TYPE_NOT_SET = 0;
    POST_TYPE_ARTICLE = 1;
    POST_TYPE_PAGE = 2;
    POST_TYPE_SPLASH = 3;
  }

  google.protobuf.StringValue post_id = 1; // The ID of the blog post
  PostType post_type = 2;                  // What kind of post is this?
  google.protobuf.StringValue title = 3;   // The title of the post
  google.protobuf.StringValue body = 4;    // The actual contents of the post
}
```

This typical message contains a single enum type and four fields. Real-life messages will contain many more fields, but this is enough for this post.

Looking at this, we could hack together something to parse this fairly simple example using regexes or other string matching. But it would end up being pretty brittle. You could break most trivial parsers just by commenting out one or more lines of valid code with a /* */ style comment. So let’s take a look at how we could get the data we need using a real parser: Tree-sitter.
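To make the brittleness concrete, here is a hypothetical, deliberately naive line-oriented regex for `<type> <name> = <number>;` field declarations. Run against a message where one field is wrapped in a /* */ comment, it still reports that field, because a regex has no idea where comments begin and end.

```go
package main

import "regexp"

// naiveField is a deliberately simplistic pattern for field declarations:
// an optional indent, a (possibly dotted) type, a name, "=", a number, ";".
var naiveField = regexp.MustCompile(`(?m)^\s*([\w.]+)\s+(\w+)\s*=\s*\d+;`)

// naiveFieldNames returns every field name the regex finds, in order.
func naiveFieldNames(src string) []string {
	var names []string
	for _, m := range naiveField.FindAllStringSubmatch(src, -1) {
		names = append(names, m[2]) // group 2 is the field name
	}
	return names
}

// sample has the title field commented out with a block comment.
const sample = `message BlogPost {
  google.protobuf.StringValue post_id = 1;
  /*
  google.protobuf.StringValue title = 3;
  */
  google.protobuf.StringValue body = 4;
}`
```

Calling `naiveFieldNames(sample)` returns `post_id`, `title`, and `body`: the commented-out `title` slips through.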

Parsing and Querying the Document

Tree-sitter has grammars for numerous programming languages and data formats, and protobuf is among them. There are also good Go bindings for Tree-sitter that make it straightforward to work with all of this from Go code. We’ll use the github.com/smacker/go-tree-sitter package and its associated protobuf grammar package.

The library supports various ways of accessing the parsed tree, but the one we’ll use here is a query expression that extracts only the data we care about.

We can use an S-expression to query the parsed tree. But we need to understand what the parsed tree looks like before we can query it. How do we visualize what is in the AST? One way would be to use the online Tree-sitter playground, but it lacks support for Protobuf. Because I was already working in Neovim, I decided to use its excellent built-in visualization and query tools!

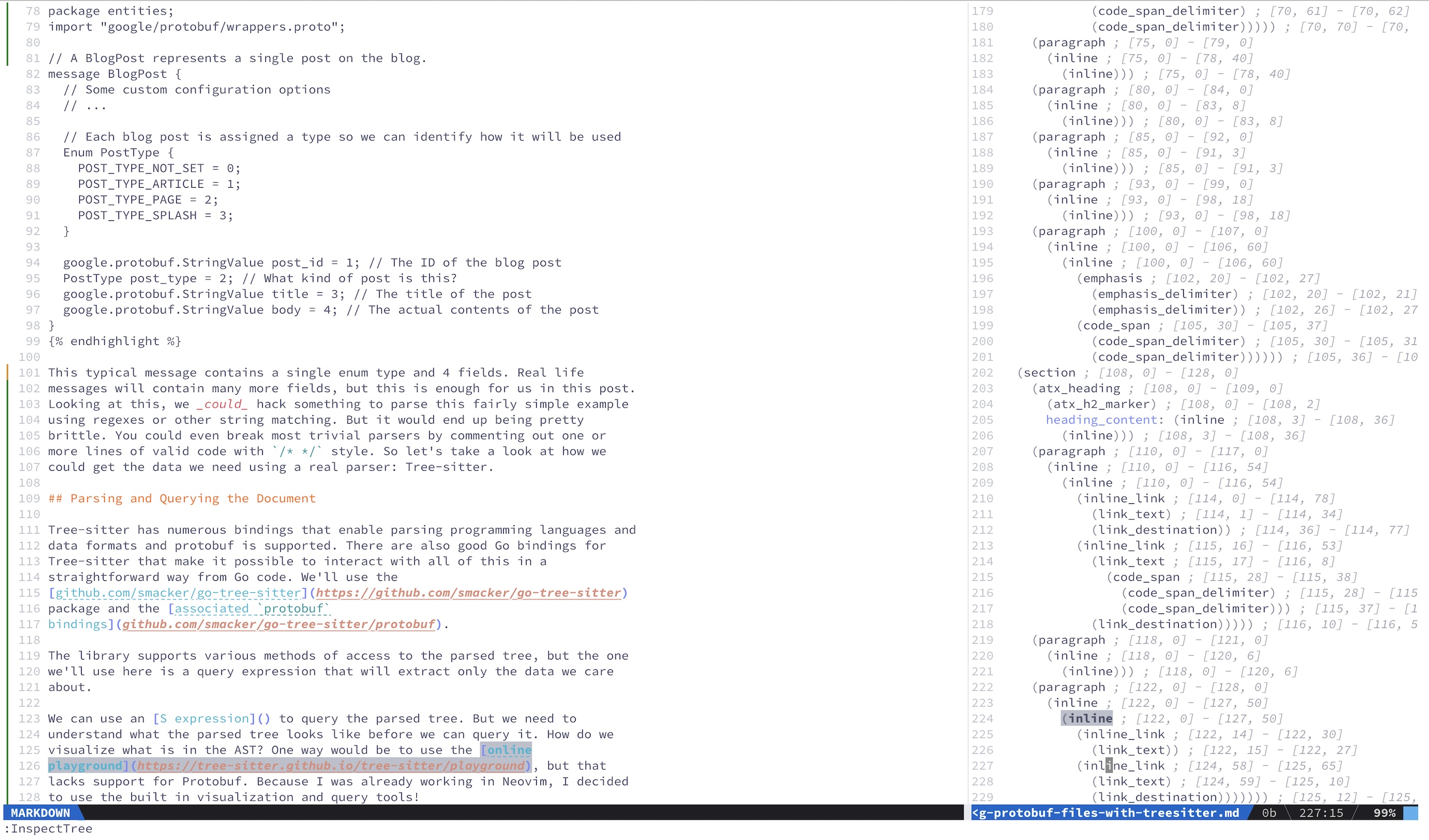

Inside Neovim you can run :InspectTree on any open document whose language has a Tree-sitter parser installed, and see a nice tree. Here I am running the inspector on the source code for this blog post. (See if you can spot the code error.)

In :InspectTree, if I highlight things in the document, I see them reflected in the tree, and vice versa. This is invaluable when working with queries, since we can see, live, what each element in the AST actually is in the document.

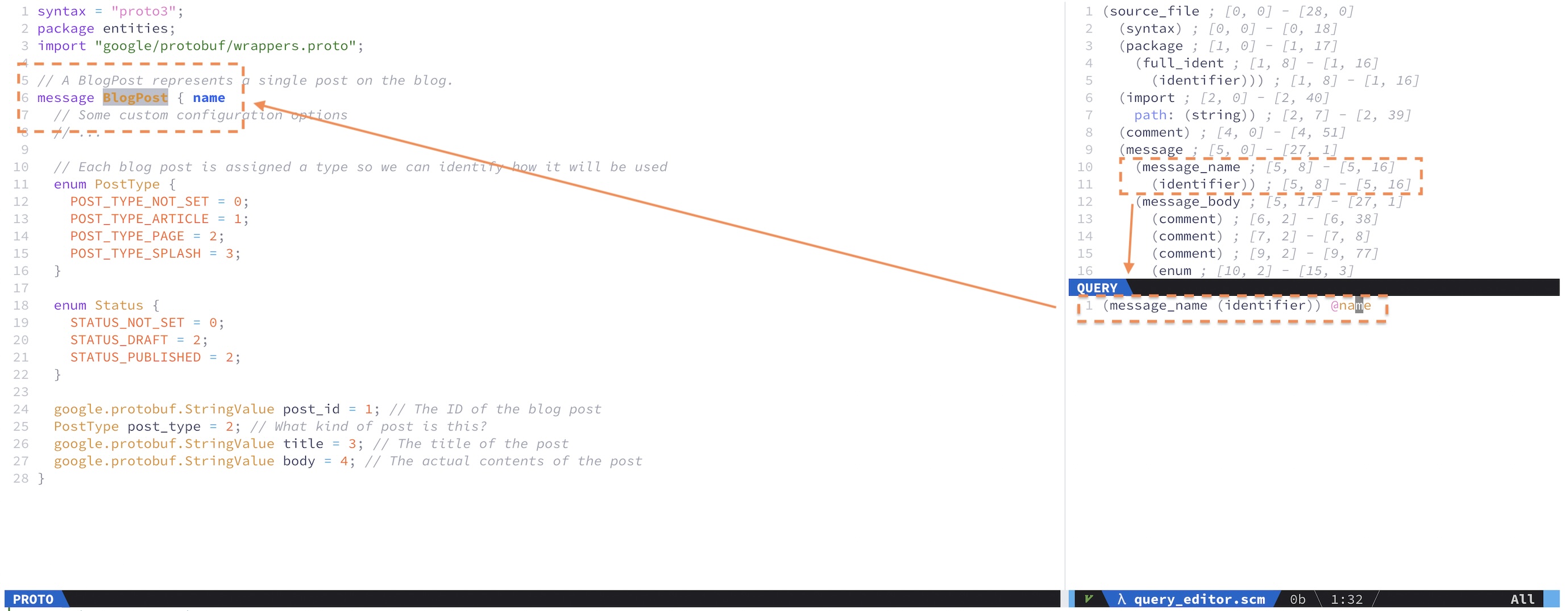

We can do the same thing for our Protobuf document. Then, it’s a matter of constructing a query to find and extract the parts of the document we want:

- Message Name

- Enum names, keys, and values

- Field names and types

Writing a query using the Neovim tools is also nice and straightforward. From the :InspectTree panel, you can open the query editor by typing :EditQuery. This brings up another pane where we can type queries and see them reflected in the original document via highlighting and annotation.

This is what writing a query looks like in the Neovim query window:

When I put the cursor over the named capture @name in the query, it highlights any matched parts of the document. There are many ways to write the queries that we might use here. You essentially just walk through the tree in the viewer and mark the things you’d like to return as named captures.

The simplest query, shown in the screenshot, just extracts the message name:

```query
(message_name (identifier)) @name
```

By inspecting the tree, we found that a message_name node always contains an identifier as its child. Capturing the message_name node as @name gives us its text, which is just that identifier, so we can refer to that capture whenever we want the message name. Then we can build up the rest of the query from there.

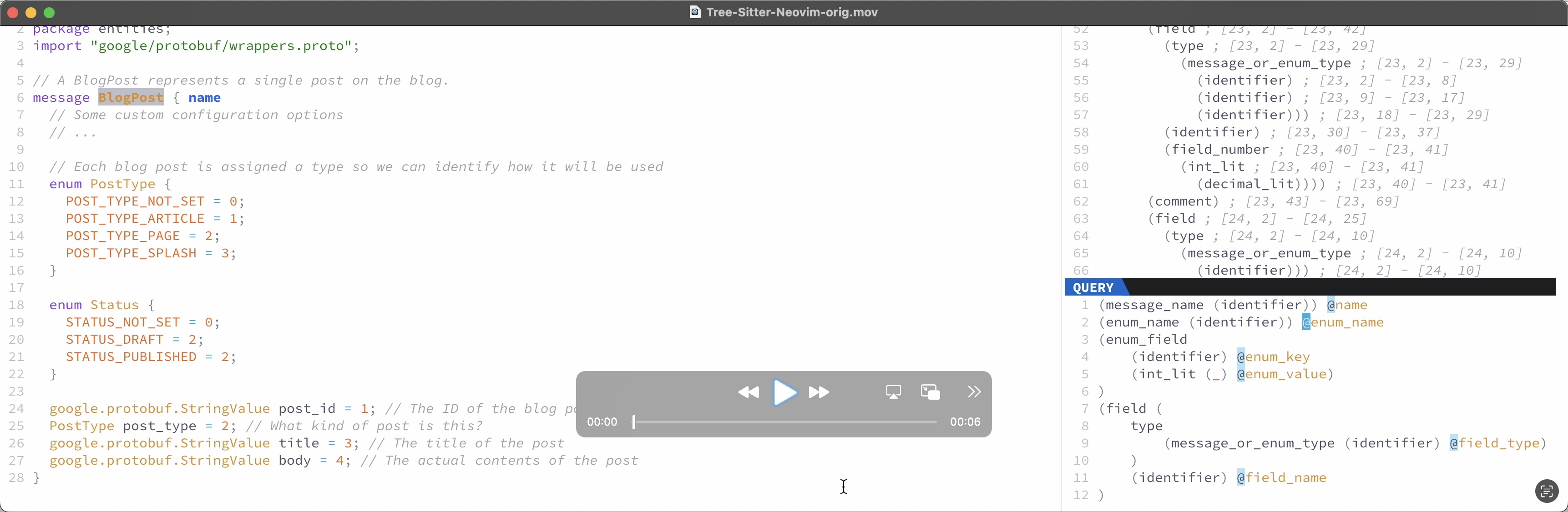

Here you can see me traversing a query that I built, and how the editor highlights the matches:

This is an example of a single query that will extract all of our required data from the protobuf definition:

```query
(message_name (identifier)) @message_name

(enum_name (identifier)) @enum_name

(enum_field
  (identifier) @enum_key
  (int_lit (_) @enum_value)
)

(field
  (
    (type (message_or_enum_type)) @field_type
  )
  (identifier) @field_name
)
```

Tree-sitter returns the captures from the document in order, which is very helpful: we can walk the results in a single pass and build a structure that is easier to reference in code. So let’s take a look at some Go code that runs the query we built against this document.
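Because the captures arrive in order, the walk only needs to remember the most recent message name and field type. Here is a minimal sketch of that idea without Tree-sitter: `capture` is a hypothetical stand-in for a Tree-sitter capture (its query name plus the matched node’s text), and `foldFields` is a hypothetical helper that builds a message → field → type map.

```go
package main

// capture is a hypothetical (name, text) pair standing in for one Tree-sitter
// capture: the capture name from the query and the matched node's text.
type capture struct {
	Name, Text string
}

// foldFields walks captures in document order. A field's type appears before
// its name in the source, so @field_type always arrives before @field_name
// and a single "current type" variable is enough.
func foldFields(captures []capture) map[string]map[string]string {
	fields := make(map[string]map[string]string)
	var message, fieldType string
	for _, c := range captures {
		switch c.Name {
		case "message_name":
			message = c.Text
			fields[message] = make(map[string]string)
		case "field_type":
			fieldType = c.Text
		case "field_name":
			// Relies on ordering: message_name and field_type were seen first.
			fields[message][c.Text] = fieldType
		}
	}
	return fields
}
```

If the captures were unordered, this single pass would attach types to the wrong fields; the ordering guarantee is what keeps the loop this simple.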

Working with Tree-sitter from Go

We need to import the two packages mentioned earlier. This is truncated for clarity; you will also need a few standard library packages such as context, fmt, os, and strconv.

```go
import (
	sitter "github.com/smacker/go-tree-sitter"   // Tree-sitter bindings
	"github.com/smacker/go-tree-sitter/protobuf" // Protobuf grammar
)
```

We need some kind of data structure to store our parsed info in. The simplest starting point is something like this:

```go
// A Message represents a single Protobuf message definition
type Message struct {
	Name   string                    // message name
	Fields map[string]string         // field name -> field type
	Enums  map[string]map[string]int // enum name -> key -> value
}
```

You could, of course, use a more structured type if that suits your purpose better.
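For example, if declaration order matters to you (Go maps don’t preserve it), a shape like the following might work. The type names and the `Field` lookup helper are hypothetical; this is a sketch, not the structure we use.

```go
package main

// FieldDef, EnumValueDef, EnumDef, and MessageDef are hypothetical names for a
// more structured alternative to the map-based Message. Slices keep the
// declaration order that Go maps would lose.
type FieldDef struct {
	Name string
	Type string
}

type EnumValueDef struct {
	Name   string
	Number int
}

type EnumDef struct {
	Name   string
	Values []EnumValueDef
}

type MessageDef struct {
	Name   string
	Fields []FieldDef
	Enums  []EnumDef
}

// Field looks up a field by name, so map-style access is still available.
func (m *MessageDef) Field(name string) (FieldDef, bool) {
	for _, f := range m.Fields {
		if f.Name == name {
			return f, true
		}
	}
	return FieldDef{}, false
}
```

The linear lookup is fine for message-sized data; if you need fast lookups on large messages, you could keep a map index alongside the slice.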

Then we need a function to read in the file and run it through the parser:

```go
// ParseMessage parses a .proto file containing a single message and returns a Message struct
func ParseMessage(filename string) (*Message, error) {
	content, err := os.ReadFile(filename)
	if err != nil {
		return nil, fmt.Errorf("failed to read file: %w", err)
	}

	// Create a new parser
	parser := sitter.NewParser()
	parser.SetLanguage(protobuf.GetLanguage())

	// Parse the content of the protobuf file
	tree, err := parser.ParseCtx(context.Background(), nil, content)
	if err != nil {
		return nil, fmt.Errorf("failed to parse protobuf: %w", err)
	}

	fields, enums, err := GetMessageFields(tree, content)
	if err != nil {
		return nil, err
	}

	// fields is keyed by message name; this function expects exactly one message per file
	if len(fields) != 1 {
		return nil, fmt.Errorf("expected one message in %s, found %d", filename, len(fields))
	}
	var name string
	for n := range fields {
		name = n
	}

	msg := &Message{
		Name:   name,
		Fields: fields[name],
		Enums:  enums,
	}

	return msg, nil
}
```

You’ll notice that most of the hard work is being done by a function we have not seen yet: GetMessageFields(). That should look something like this:

```go
// GetMessageFields runs the Tree-sitter query and returns two maps: the fields of
// each message (message -> field -> type) and the values of each enum (enum -> key -> value)
func GetMessageFields(tree *sitter.Tree, content []byte) (map[string]map[string]string, map[string]map[string]int, error) {
	query := `
(message_name (identifier)) @message_name

(enum_name (identifier)) @enum_name

(enum_field
  (identifier) @enum_key
  (int_lit (_) @enum_value)
)

(field
  (
    (type (message_or_enum_type)) @field_type
  )
  (identifier) @field_name
)
`

	q, qc, err := queryTree(tree, query)
	if err != nil {
		return nil, nil, err
	}

	fields := make(map[string]map[string]string)
	enumFields := make(map[string]map[string]int)

	var (
		fieldName, fieldType, messageName string
		enumName, enumKey, enumValue      string
	)

	// Iterate over the matches and build up the field and enum maps
	for {
		match, ok := qc.NextMatch()
		if !ok {
			break
		}

		for _, capture := range match.Captures {
			node := capture.Node
			captureName := q.CaptureNameForId(capture.Index)

			switch captureName {
			case "message_name":
				messageName = node.Content(content)
				fields[messageName] = make(map[string]string)
			case "field_name":
				// field_type is captured before field_name, so fieldType is current
				fieldName = node.Content(content)
				fields[messageName][fieldName] = fieldType
			case "field_type":
				fieldType = node.Content(content)
			case "enum_name":
				enumName = node.Content(content)
				enumFields[enumName] = make(map[string]int)
			case "enum_key":
				enumKey = node.Content(content)
			case "enum_value":
				enumValue = node.Content(content)
				// Tree-sitter reports a literal 0 as octal, and protobuf also allows
				// octal and hex enum values, so let strconv pick the base from the prefix
				n, err := strconv.ParseInt(enumValue, 0, 32)
				if err != nil {
					return nil, nil, fmt.Errorf("invalid enum value %q: %w", enumValue, err)
				}
				enumFields[enumName][enumKey] = int(n)
			default:
				return nil, nil, fmt.Errorf("unexpected capture: %s", captureName)
			}
		}
	}

	return fields, enumFields, nil
}
```

Here we define the query, ask Tree-sitter to run it, and then loop over the matches, inspecting each capture’s name and building up the maps.
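Protobuf integer literals can be decimal, octal (a bare `0` counts as octal, which is why Tree-sitter reports it that way), or hex. A hypothetical helper like `parseEnumNumber` below handles all three by calling `strconv.ParseInt` with base 0, which picks the base from the literal’s prefix; something like it could be used for the `enum_value` case. It is a sketch: it does not handle negative enum values, whose minus sign sits outside the `int_lit` node.

```go
package main

import "strconv"

// parseEnumNumber converts the text of a protobuf integer literal to an int.
// Base 0 lets strconv choose the base from the prefix, covering protobuf's
// decimal ("3"), octal ("017", and "0" itself), and hex ("0x1F") forms.
func parseEnumNumber(lit string) (int, error) {
	n, err := strconv.ParseInt(lit, 0, 32) // enum values are 32-bit
	if err != nil {
		return 0, err
	}
	return int(n), nil
}
```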

The last piece of code to show is the queryTree() function that compiles the query and starts the cursor. It looks like this:

```go
// queryTree compiles a Tree-sitter query and runs it over a pre-existing tree
func queryTree(tree *sitter.Tree, query string) (*sitter.Query, *sitter.QueryCursor, error) {
	q, err := sitter.NewQuery([]byte(query), protobuf.GetLanguage())
	if err != nil {
		return nil, nil, fmt.Errorf("failed to compile query: %w", err)
	}

	// Execute the query
	qc := sitter.NewQueryCursor()
	qc.Exec(q, tree.RootNode())

	return q, qc, nil
}
```

And that’s pretty much the meat of it. We can call ParseMessage() and get back a Message{} struct populated with our message name, fields, and enums. In JSON, it would look something like this:

```json
{
  "Enums": {
    "PostType": {
      "POST_TYPE_NOT_SET": 0,
      "POST_TYPE_ARTICLE": 1,
      "POST_TYPE_PAGE": 2,
      "POST_TYPE_SPLASH": 3
    }
  },
  "Fields": {
    "post_id": "google.protobuf.StringValue",
    "post_type": "PostType",
    "title": "google.protobuf.StringValue",
    "body": "google.protobuf.StringValue"
  },
  "Name": "BlogPost"
}
```

And that’s it! What you do with this is up to you, but it gets you started. If you need to parse sub-types, you could design a query to do that. If you want to parse RPC definitions, you could do that, too. We use this information to generate our bindings (which includes some logic).
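As a rough illustration of that last step, here is a minimal sketch that turns a Message into Go source for a plain struct with json tags. The `goTypes` mapping, the CamelCase naming in `goName`, and the assumption that each enum gets a Go type of the same name are hypothetical choices, not our actual generator; real bindings need more logic (repeated fields, nested types, conversion functions).

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Message mirrors the struct used earlier in this post.
type Message struct {
	Name   string
	Fields map[string]string
	Enums  map[string]map[string]int
}

// goTypes is a hypothetical mapping from protobuf wrapper types to Go types.
var goTypes = map[string]string{
	"google.protobuf.StringValue": "*string",
	"google.protobuf.Int64Value":  "*int64",
	"google.protobuf.BoolValue":   "*bool",
}

// goName converts snake_case to CamelCase (post_id -> PostId); ASCII only.
func goName(s string) string {
	parts := strings.Split(s, "_")
	for i, p := range parts {
		if p != "" {
			parts[i] = strings.ToUpper(p[:1]) + p[1:]
		}
	}
	return strings.Join(parts, "")
}

// GenerateStruct emits Go source for a plain struct mirroring m, with json tags.
func GenerateStruct(m Message) (string, error) {
	names := make([]string, 0, len(m.Fields))
	for name := range m.Fields {
		names = append(names, name)
	}
	sort.Strings(names) // map order is random; sort for deterministic output

	var b strings.Builder
	fmt.Fprintf(&b, "type %s struct {\n", m.Name)
	for _, name := range names {
		pbType := m.Fields[name]
		goType, ok := goTypes[pbType]
		if !ok {
			if _, isEnum := m.Enums[pbType]; !isEnum {
				return "", fmt.Errorf("field %s: no Go type mapping for %s", name, pbType)
			}
			goType = pbType // assumes a Go type with the enum's name is generated too
		}
		fmt.Fprintf(&b, "\t%s %s `json:\"%s,omitempty\"`\n", goName(name), goType, name)
	}
	b.WriteString("}\n")
	return b.String(), nil
}
```

Unknown types produce an error rather than a guess, which keeps the generator honest as schemas grow. The output is not column-aligned; you could pass it through `format.Source` from the `go/format` package to get gofmt-style output.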

Conclusion

This basis for tooling has been pretty good for us. I will undoubtedly bring Tree-sitter and the Neovim tooling to bear on other problems in the future. Hopefully this overview gives you a starting point.